Mastering Function Calling with OpenAI APIs: A Deep Dive

In this blog, we explore in detail how function calling works with OpenAI’s models, using Python. Function calling lets the model request function invocations dynamically based on user requests. We’ll walk through the entire process: setting up, defining functions, declaring tools, and handling responses.

1. What is Function Calling in OpenAI APIs?

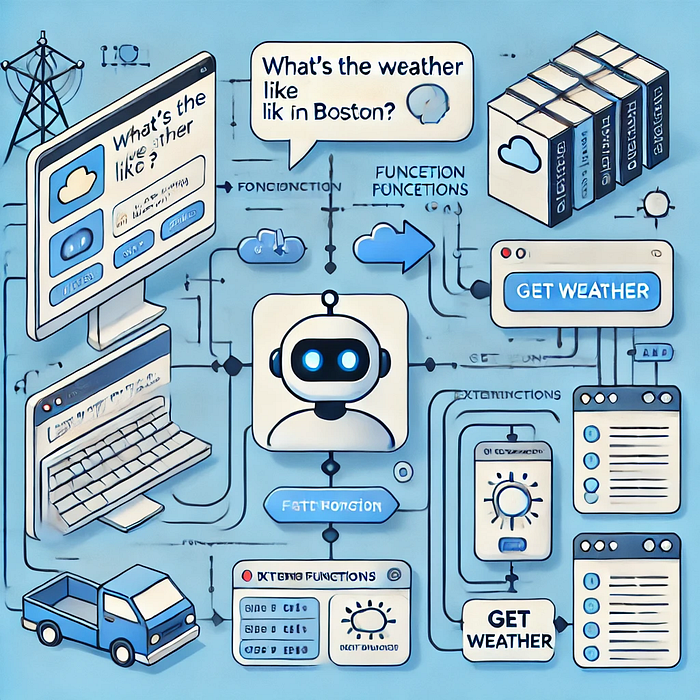

Function calling is a feature that allows OpenAI models to interact with external systems or functions programmatically. The idea is to let the model decide when to call an external function and how to process its result. For example, if a user asks the model for the weather, the model can detect that it needs to retrieve live data from a weather API.

With function calling:

- The model reads the user input (e.g., “What’s the weather in Boston?”).

- Based on the conversation and the function metadata we supply, it decides whether a function call is needed.

- It returns the name of the function and the arguments to pass. Our code then runs the function and sends the output back, and the model integrates it into the conversation.

Key Components:

- Tools: Functions that the model can invoke.

- Arguments: Data passed into the functions.

- Role-based messaging: Structured interactions where the user, assistant, and tool have specific roles.
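The flow above can be sketched without any network access. In the minimal sketch below, fake_model is a hypothetical stand-in for the real chat model (it is not part of the OpenAI library) and simply returns a hard-coded tool call. The point is that the model only requests a call, while our own code executes the function and appends the result as a tool message.

```python
import json

def get_current_weather(location, unit="fahrenheit"):
    """Dummy tool: returns fixed weather data as a JSON string."""
    return json.dumps({"location": location, "temperature": "50", "unit": unit})

def fake_model(messages):
    """Hypothetical stand-in for the chat model (no real API call is made)."""
    if messages[-1]["role"] == "user":
        # First turn: the "model" asks for a tool call instead of answering.
        return {
            "role": "assistant",
            "tool_calls": [{
                "id": "call_demo_1",
                "type": "function",
                "function": {"name": "get_current_weather",
                             "arguments": '{"location": "Boston, MA"}'},
            }],
        }
    # Second turn: the last message is the tool result, so answer in text.
    data = json.loads(messages[-1]["content"])
    return {"role": "assistant",
            "content": f"It is {data['temperature']} degrees {data['unit']} in {data['location']}."}

messages = [{"role": "user", "content": "What's the weather in Boston?"}]

request = fake_model(messages)           # 1. the model requests a tool call
messages.append(request)

call = request["tool_calls"][0]
args = json.loads(call["function"]["arguments"])
result = get_current_weather(**args)     # 2. our code runs the function

messages.append({"role": "tool", "tool_call_id": call["id"], "content": result})
answer = fake_model(messages)            # 3. the model turns the result into text
print(answer["content"])  # It is 50 degrees fahrenheit in Boston, MA.
```

The rest of this post replaces fake_model with a real API call, but the three numbered steps stay the same.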

2. Step-by-Step Guide

Let’s now get into the implementation details.

Step 1: Setting Up the Environment

First, we need to load the necessary libraries and API credentials. In this case, we use dotenv to load our OpenAI API key from a .env file into the environment, so the key never appears in the source code.

    import os
    import json

    import openai
    from dotenv import load_dotenv

    # Load the environment variables from a .env file
    load_dotenv()

    # Read the key that load_dotenv() placed in the environment (never hard-code it)
    openai.api_key = os.getenv("OPENAI_API_KEY")

Explanation:

- os: Used to interface with the operating system, particularly to access environment variables.

- openai: The official library to communicate with OpenAI’s API.

- json: To handle JSON data, such as the function arguments and results exchanged with the model.

- dotenv: To load environment variables like API keys from a .env file.
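A missing key usually only shows up later as an authentication error, so it can help to fail fast. The helper below is a minimal sketch; require_env is a hypothetical name introduced here, not part of dotenv or the OpenAI library, and the example passes an explicit mapping with a placeholder value so the snippet runs without a real key.

```python
import os

def require_env(name, env=None):
    """Return the value of an environment variable or raise a clear error."""
    env = os.environ if env is None else env
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; add it to your .env file or shell environment")
    return value

# Example with an explicit mapping (placeholder value, not a real key)
print(require_env("OPENAI_API_KEY", {"OPENAI_API_KEY": "sk-example"}))
```

In the setup code, you would call require_env("OPENAI_API_KEY") right after load_dotenv().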

Step 2: Defining the API Call Wrapper

Next, we define a helper function that sends messages and metadata to OpenAI’s chat model. This function will wrap the openai.chat.completions.create API.

    def get_completion(messages, model="gpt-3.5-turbo-1106", temperature=0, max_tokens=300, tools=None, tool_choice=None):
        # Only send tools / tool_choice when they are set; the API may reject explicit nulls
        optional = {}
        if tools is not None:
            optional["tools"] = tools
        if tool_choice is not None:
            optional["tool_choice"] = tool_choice

        response = openai.chat.completions.create(
            model=model,
            messages=messages,
            temperature=temperature,
            max_tokens=max_tokens,
            **optional,
        )
        # The returned message holds either text content or tool_calls
        return response.choices[0].message

Key Parameters:

- model: The model to use (in this case, we specify gpt-3.5-turbo-1106, which supports function calling).

- messages: A list of conversation messages. The user’s inputs and assistant’s responses are stored here.

- temperature: Controls randomness (set to 0 for the most repeatable responses).

- max_tokens: Caps the number of tokens in the response (in this case, 300).

- tools: A list of functions the model can call.

- tool_choice: Optional. Controls whether the model may call a tool and, if needed, which one. When it is omitted and tools are supplied, the model decides on its own.
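The tool_choice parameter accepts a few different shapes, which is easy to get wrong. The sketch below builds three commonly used values: the strings "auto" and "none", and an object that forces one specific function. The helper name pick_tool_choice and its mode argument are assumptions made for illustration; only the returned values are sent to the API.

```python
def pick_tool_choice(mode, function_name=None):
    """Build a tool_choice value for a chat completion request."""
    if mode in ("auto", "none"):
        # "auto": the model decides; "none": the model must answer in text
        return mode
    if mode == "force":
        if not function_name:
            raise ValueError("function_name is required when mode='force'")
        # Force the model to call one specific function
        return {"type": "function", "function": {"name": function_name}}
    raise ValueError(f"unknown mode: {mode}")

print(pick_tool_choice("auto"))
print(pick_tool_choice("force", "get_current_weather"))
```

The result can be passed straight through, for example get_completion(messages, tools=tools, tool_choice=pick_tool_choice("force", "get_current_weather")).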

Step 3: Creating a Dummy Weather Function

We simulate an external function that returns dummy weather data for a specific location. This will demonstrate how OpenAI can integrate function outputs.

    def get_current_weather(location, unit="fahrenheit"):
        """Get the current weather in a given location."""
        weather = {
            "location": location,
            "temperature": "50",  # Simulated temperature
            "unit": unit,
        }
        return json.dumps(weather)

Explanation:

- location: The city and state (or country) for which weather is requested.

- unit: The temperature unit (fahrenheit by default).

- json.dumps(): Converts the weather data into a JSON string, mimicking an API response.

Step 4: Defining Tools for Function Calling

Here’s the key part: defining the tools (functions) the model can use. This is essential because the model needs to know which functions are available and how to call them. The parameters field must be a JSON Schema object, with the individual arguments listed under properties.

    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "description": "Fetches the current weather for a given location and unit (fahrenheit or celsius).",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {"type": "string", "description": "The city and state or city and country."},
                        "unit": {"type": "string", "enum": ["fahrenheit", "celsius"], "description": "Temperature unit."}
                    },
                    # unit has a default in the Python function, so only location is required
                    "required": ["location"]
                }
            }
        }
    ]

Detailed Breakdown:

- type: Specifies the type of tool (in this case, a function).

- function: Defines the function’s metadata:

  - name: The name of the function the model will invoke (must match the Python function).

  - description: A textual explanation of what the function does. The model uses this to determine if this function should be called based on the user’s request.

  - parameters: A JSON Schema object describing the arguments. Under properties, location is a string input that defines the geographical location and unit specifies the temperature unit. The required list names the arguments the model must always supply.

This metadata helps the model identify when to invoke the function and what arguments to pass.
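Because the name and parameters in the metadata have to line up with the Python function, a small consistency check can catch drift between the two. The sketch below assumes the parameters field follows the JSON Schema layout (an object with properties and required); find_mismatches is a hypothetical helper written for this post, and it only covers plain named parameters.

```python
import inspect

def get_current_weather(location, unit="fahrenheit"):
    """Same signature as the dummy weather function in this post."""
    return {"location": location, "unit": unit}

weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Fetches the current weather for a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string"},
                "unit": {"type": "string", "enum": ["fahrenheit", "celsius"]},
            },
            "required": ["location"],
        },
    },
}

def find_mismatches(tool, func):
    """Return a list of differences between a tool schema and a function signature."""
    schema = tool["function"]["parameters"]
    declared = set(schema.get("properties", {}))
    required = set(schema.get("required", []))
    params = inspect.signature(func).parameters
    accepted = set(params)
    no_default = {n for n, p in params.items() if p.default is inspect.Parameter.empty}

    problems = []
    if tool["function"]["name"] != func.__name__:
        problems.append("tool name does not match function name")
    for name in sorted(declared - accepted):
        problems.append(f"schema declares unknown parameter: {name}")
    for name in sorted(no_default - required):
        problems.append(f"parameter without default not marked required: {name}")
    return problems

print(find_mismatches(weather_tool, get_current_weather))  # []
```

Running a check like this in a unit test keeps the schema honest when the Python function changes.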

Step 5: Structuring the Conversation

Now, let’s build the structure of the conversation. It contains the user’s question followed by the assistant message in which the model requests a function call. In a live application this assistant message is returned by the API; here we hard-code it so the structure is easy to see.

    messages = [
        {"role": "user", "content": "What's the weather like in Boston?"},
        {
            "role": "assistant",
            "tool_calls": [
                {
                    "id": "call_knYCGz82U0ju4yNjqfbsLiJq",
                    "type": "function",
                    "function": {
                        "arguments": '{"location":"Boston, MA"}',
                        "name": "get_current_weather"
                    }
                }
            ]
        }
    ]

Explanation:

role: Defines who is speaking. In this case:

- user: Represents the user’s question: “What’s the weather like in Boston?”

- assistant: The assistant detects the need to call the get_current_weather function, passing the argument location: Boston, MA.

The tool_calls section records the function call the assistant proposes, along with the function’s arguments as a JSON string. The id links this call to the tool message that will later carry its result.

Step 6: Calling the API

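With the conversation structured and the tools defined, we can now make the API call using the helper function. At this point we send only the user’s question, because the API expects every assistant tool call in the history to be followed by a matching tool message, and we have not added one yet:

    # Send only the user message; the model decides whether a tool is needed
    first_response = get_completion(messages[:1], tools=tools)
    print(first_response.tool_calls)

Here, the model receives the user message and the tool definitions and decides whether the tool needs to be called. If it does, the returned message contains a tool_calls entry like the one we hard-coded in Step 5 rather than a final text answer.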

Step 7: Parsing the Model Response

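When the assistant requests a function call, we need to run the function ourselves and pass its result back into the conversation. Here’s how we handle that:

    # The assistant message that contains the tool call (hard-coded in Step 5)
    assistant_message = messages[1]
    tool_call = assistant_message["tool_calls"][0]

    # The arguments arrive as a JSON string, so decode them before calling the function
    arguments = json.loads(tool_call["function"]["arguments"])
    weather = get_current_weather(**arguments)

    messages.append({
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "name": tool_call["function"]["name"],
        "content": weather
    })

Step-by-step breakdown:

- json.loads: Decodes the JSON string of arguments into a Python dictionary.

- get_current_weather: Calls the dummy weather function with the provided location.

- Appending to messages: Adds the function’s output back into the conversation under the tool role, with tool_call_id matching the id of the assistant’s call.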

Finally, you can generate the assistant’s response using:

    final_response = get_completion(messages, tools=tools)
    print(final_response)

3. Detailed Breakdown of Each Step

Tool Metadata:

The model relies heavily on the metadata for each function. It parses the tool’s description and parameters to decide when and how to call it. Therefore, crafting accurate descriptions and defining parameters clearly is crucial.

The Role of Messages:

The messages array is critical because it defines the conversation history. The model analyzes these messages to decide when to make a function call. Each message has a role, which could be:

- User: The end-user’s inputs.

- Assistant: The responses generated by the model.

- Tool: The output from an external function.

4. Challenges & Best Practices

Challenges:

- Tool Identification: If the tool metadata is poorly described, the model may fail to call the correct function.

- Argument Mismatch: The arguments the model generates may not match the parameters the function expects, or may not be valid JSON at all. Validate them before calling the function.

- Rate Limits: OpenAI’s API has rate limits that you should be aware of when making multiple requests in production.
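For the rate-limit challenge, a common mitigation is to retry with exponential backoff. The sketch below is generic and makes no API calls: call_with_backoff and FakeRateLimitError are hypothetical names used for the demo, and in real code you would pass whichever exception your client library raises for HTTP 429 responses as retry_on.

```python
import time

def call_with_backoff(fn, max_attempts=4, base_delay=1.0, retry_on=(Exception,), sleep=time.sleep):
    """Call fn(); on a retryable error wait base_delay * 2**attempt, then retry."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * (2 ** attempt))

class FakeRateLimitError(Exception):
    """Stand-in for a rate-limit error; not the SDK's own exception class."""

# Demo: a flaky function that fails twice before succeeding
attempts = {"count": 0}

def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise FakeRateLimitError("too many requests")
    return "ok"

delays = []  # record the waits instead of actually sleeping
result = call_with_backoff(flaky, base_delay=0.5, retry_on=(FakeRateLimitError,), sleep=delays.append)
print(result, delays)  # ok [0.5, 1.0]
```

With the helper from Step 2, usage could look like call_with_backoff(lambda: get_completion(messages, tools=tools), retry_on=(YourRateLimitError,)), where YourRateLimitError is a placeholder for the real exception class.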

Best Practices:

- Accurate Tool Descriptions: Always be precise when defining tools. Clearly explain the function’s purpose and its parameters.

- Thorough Testing: Test the model’s ability to correctly invoke tools and ensure the function’s output is handled gracefully.

- Handling Errors: Implement error handling for cases where the model calls a tool with incorrect arguments.
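One way to apply the error-handling advice is to wrap tool execution so that every failure becomes a tool message the model can read, instead of an exception that ends the conversation. This is a minimal sketch under a few assumptions: tool calls are plain dictionaries shaped like the ones in Step 5, and AVAILABLE_TOOLS and run_tool_call are hypothetical names introduced here.

```python
import json

def get_current_weather(location, unit="fahrenheit"):
    """Dummy tool: returns fixed weather data as a JSON string."""
    return json.dumps({"location": location, "temperature": "50", "unit": unit})

# Registry of functions the model is allowed to call
AVAILABLE_TOOLS = {"get_current_weather": get_current_weather}

def run_tool_call(tool_call):
    """Execute one tool call and always return a tool-role message."""
    name = tool_call["function"]["name"]
    func = AVAILABLE_TOOLS.get(name)
    if func is None:
        content = json.dumps({"error": f"unknown tool: {name}"})
    else:
        try:
            args = json.loads(tool_call["function"]["arguments"])
            content = func(**args)
        except json.JSONDecodeError:
            content = json.dumps({"error": "arguments were not valid JSON"})
        except TypeError as exc:
            # Missing, unexpected, or non-object arguments end up here
            content = json.dumps({"error": f"bad arguments: {exc}"})
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": content}

# A call with malformed arguments still yields a well-formed tool message
bad_call = {"id": "call_demo_2",
            "function": {"name": "get_current_weather", "arguments": "{not json"}}
print(run_tool_call(bad_call))
```

Returning the error as tool content gives the model a chance to correct its arguments on the next turn.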

5. Conclusion

This blog walked through a detailed and structured example of how to integrate function calling with OpenAI APIs. The ability to call external functions dynamically makes the models significantly more powerful and adaptable to real-world applications. Whether it’s retrieving live data or performing complex calculations, function calling opens up many possibilities for expanding a model’s capabilities.

By following the steps above, you can integrate your own tools into the OpenAI model and leverage them to make your assistant smarter and more interactive.

For discussion of similar work, reach out at:

kshitijkutumbe@gmail.com