LangGraph AI Agents: Building a Dynamic Order Management System (A Step-by-Step Tutorial)

In this detailed tutorial, we’ll explore LangGraph, a library for orchestrating complex, multi-step workflows with Large Language Models (LLMs), and apply it to a common e-commerce problem: deciding whether to place or cancel an order based on a user’s query. By the end of this tutorial, you’ll understand how to:

- Set up LangGraph in a Python environment.

- Load and manage data (e.g., inventory and customers).

- Define nodes (the individual tasks in your workflow).

- Build a graph of nodes and edges, including conditional branching.

- Visualize and test the workflow.

We’ll proceed step by step and explain every concept in detail, so the tutorial suits beginners as well as anyone looking to build dynamic or cyclical workflows with LLMs. A link to the dataset is included so you can try this out yourself.

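Table of Contents

- What Is LangGraph?

- The Problem Statement: Order Management

- Imports Explanation

- Data Loading and State Definition

- Creating Tools and LLM Integration

- Defining Workflow Nodes

- Building the Workflow Graph

- Visualizing and Testing the Workflow

- Conclusion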

What Is LangGraph?

LangGraph is a library that brings a graph-based approach to LangChain workflows. Traditional pipelines often move linearly from one step to another, but real-world tasks frequently require branching, conditional logic, or even loops (retrying failed steps, clarifying user input, etc.).

Key features of LangGraph:

- Nodes: Individual tasks or functions (e.g., check inventory, compute shipping).

- Edges: Define the flow of data and control between nodes. Can be conditional.

- Shared State: Each node returns a partial update that is merged into a shared state object, so you don’t have to pass data between steps by hand.

- Tool Integration: Easily incorporate external tools or functions that an LLM can call.

- Human-In-The-Loop (optional): Insert nodes that require human review.
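To make these ideas concrete before touching the real library, here is a toy model in plain Python of nodes, plain edges, a conditional edge, and a shared state that each node updates partially. It is a hypothetical sketch for intuition only: `run_graph`, `NODES`, `EDGES`, and `ROUTERS` are invented names, not LangGraph’s API, and the string `"END"` stands in for LangGraph’s `END` constant.

```python
from typing import Callable, Dict

# Toy model of the concepts above; NOT LangGraph's API. All names are hypothetical.
State = Dict[str, object]
Node = Callable[[State], State]
Router = Callable[[State], str]


def run_graph(
    nodes: Dict[str, Node],
    edges: Dict[str, str],
    routers: Dict[str, Router],
    start: str,
    state: State,
    max_steps: int = 20,
) -> State:
    """Walk the graph from `start`, merging each node's partial update into the state."""
    current = start
    for _ in range(max_steps):
        if current == "END":
            return state
        update = nodes[current](state)
        state = {**state, **update}  # shared state: merge the node's partial update
        if current in routers:  # conditional edge: a function chooses the next node
            current = routers[current](state)
        else:  # plain edge: fixed successor, defaulting to END
            current = edges.get(current, "END")
    raise RuntimeError("max_steps exceeded; the graph may be looping forever")


def categorize(state: State) -> State:
    text = str(state["query"]).lower()
    return {"category": "CancelOrder" if "cancel" in text else "PlaceOrder"}


def place(state: State) -> State:
    return {"order_status": "placed"}


def cancel(state: State) -> State:
    return {"order_status": "cancelled"}


NODES = {"Categorize": categorize, "Place": place, "Cancel": cancel}
EDGES = {"Place": "END", "Cancel": "END"}
ROUTERS = {"Categorize": lambda s: "Cancel" if s["category"] == "CancelOrder" else "Place"}

result = run_graph(NODES, EDGES, ROUTERS, "Categorize", {"query": "Please cancel order 223"})
print(result)
# {'query': 'Please cancel order 223', 'category': 'CancelOrder', 'order_status': 'cancelled'}
```

LangGraph adds much more on top (reducers such as the message-appending one behind `MessagesState`, streaming, checkpointing), but the control flow follows the same shape.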

The Problem Statement: Order Management

In this scenario, a user’s query can either be about placing a new order or canceling an existing one:

- PlaceOrder: Check item availability, compute shipping, and simulate payment.

- CancelOrder: Extract an `order_id` and mark the order as canceled.

Because we have to branch (decide “PlaceOrder” vs. “CancelOrder”), we’ll use LangGraph to create a conditional flow:

- Categorize the query.

- If PlaceOrder, move on to checking inventory, computing shipping, and processing payment.

- If CancelOrder, parse out the `order_id` and call a cancellation tool.

Imports Explanation

Below is the first part of the code, covering the imports and environment setup, followed by commentary on each segment.

```python
# Import required libraries
import os
import random
from typing import Dict, Literal, TypedDict

import pandas as pd
from IPython.display import Image, display
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables.graph import MermaidDrawMethod
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.graph import END, START, MessagesState, StateGraph
from langgraph.prebuilt import ToolNode

# Set the OpenAI API key: paste your key here, or export OPENAI_API_KEY
# in your shell and remove this line
os.environ["OPENAI_API_KEY"] = ""
```

`langchain_core.tools`, `langchain_openai`, `ToolNode`, etc.:

- `tool` (a decorator) converts Python functions into “tools” an LLM can call.

- `ChatOpenAI` is our LLM client for talking to GPT models.

- `ToolNode` is a prebuilt node from `langgraph.prebuilt` that executes the tool calls an LLM requests.

- `StateGraph`, `MessagesState`, `START`, and `END` come from `langgraph.graph`; they’re the building blocks for defining our workflow.

- `MermaidDrawMethod` helps render the workflow as a Mermaid.js diagram.
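The snippet above assigns an empty string to `OPENAI_API_KEY`, which leaves the key blank until you edit it. Because `ChatOpenAI` also reads `OPENAI_API_KEY` from the environment, one alternative is to export the key in your shell and fail fast if it is missing. `require_env` below is a hypothetical helper, not part of the tutorial’s code.

```python
import os


def require_env(name: str) -> str:
    """Return a non-empty environment variable or raise with a helpful message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it in your shell before starting the notebook")
    return value


# Usage (hypothetical): call this before creating ChatOpenAI
# api_key = require_env("OPENAI_API_KEY")
```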

Data Loading and State Definition

Link for data: Data

In the next snippet, we load CSV files (for inventory and customers) and convert them to dictionaries. We also define our State typed dictionary.

```python
# Load datasets
inventory_df = pd.read_csv("inventory.csv")
customers_df = pd.read_csv("customers.csv")

# Convert datasets to dictionaries keyed by ID:
# {item_id: {column: value, ...}} and {customer_id: {column: value, ...}}
inventory = inventory_df.set_index("item_id").T.to_dict()
customers = customers_df.set_index("customer_id").T.to_dict()


class State(TypedDict):
    query: str
    category: str
    next_node: str
    item_id: str
    order_status: str
    cost: str
    payment_status: str
    location: str
    quantity: int
```

CSV to Dictionaries:

- `inventory` and `customers` are dictionaries keyed by `item_id` or `customer_id`. This makes lookups like `inventory["item_51"]` easy.
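To see what `set_index(...).T.to_dict()` produces, here is a dependency-free sketch that builds the same `{id: {column: value}}` shape with the standard `csv` module. The two sample rows are made up for illustration, and only the `stock` and `weight` columns that the tutorial’s code reads are included; the real `inventory.csv` may contain more columns and different values. `load_keyed` and `_to_number` are hypothetical helpers.

```python
import csv
import io
from typing import Dict

# Made-up sample rows; the real inventory.csv may differ
SAMPLE_INVENTORY_CSV = """item_id,stock,weight
item_51,10,1.5
item_52,0,3.0
"""


def _to_number(value: str) -> object:
    """Convert numeric strings to int or float; leave other text unchanged."""
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def load_keyed(csv_text: str, key: str) -> Dict[str, Dict[str, object]]:
    """Build {row[key]: {other columns}}, the same shape as df.set_index(key).T.to_dict()."""
    table: Dict[str, Dict[str, object]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        row_key = row.pop(key)  # the key column becomes the outer dictionary key
        table[row_key] = {col: _to_number(val) for col, val in row.items()}
    return table


inventory = load_keyed(SAMPLE_INVENTORY_CSV, "item_id")
print(inventory["item_51"])  # {'stock': 10, 'weight': 1.5}
```

In pandas, `inventory_df.set_index("item_id").to_dict(orient="index")` builds the same outer shape without the transpose.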

State:

- A typed dictionary that tells us which fields to expect, for instance `query`, `category`, and `item_id`.

- `category` is typically “PlaceOrder” or “CancelOrder.”

- `next_node` can store the next node name, though we rely on the graph’s edges for transitions.

- Keeping these fields together (inventory checks, payment status, and so on) gives a single place to see everything the order flow tracks.
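Nodes return only the keys they change, so a TypedDict declared with `total=False` (every key optional) describes those partial updates more accurately than the all-required `State` above. The sketch below is a hypothetical variant, `OrderState`, plus a small check that catches a node writing a key the schema does not declare (for example `status` instead of `order_status`).

```python
from typing import TypedDict, get_type_hints


class OrderState(TypedDict, total=False):
    """Hypothetical variant of State in which every key is optional."""
    query: str
    category: str
    next_node: str
    item_id: str
    order_status: str
    cost: str
    payment_status: str
    location: str
    quantity: int


# All keys declared on the schema
ALLOWED_KEYS = frozenset(get_type_hints(OrderState))


def check_update(update: dict) -> dict:
    """Raise if a node's partial update writes a key the schema does not declare."""
    unknown = set(update) - ALLOWED_KEYS
    if unknown:
        raise KeyError(f"Undeclared state keys: {sorted(unknown)}")
    return update
```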

Creating Tools and LLM Integration

Now we define our LLM and tools. The main tool here is cancel_order, which uses the LLM to extract an order_id from the query.

```python
@tool
def cancel_order(query: str) -> dict:
    """Simulate order cancelling"""
    # `llm` is defined below; it is looked up when the tool actually runs.
    # .get() returns None instead of raising KeyError if the LLM omits the field.
    order_id = llm.with_structured_output(method="json_mode").invoke(
        f"Extract order_id from the following text in json format: {query}"
    ).get("order_id")
    if not order_id:
        return {"error": "Missing 'order_id'."}
    return {"order_status": "Order stands cancelled"}


# Initialize LLM and bind tools
llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)
tools_2 = [cancel_order]
llm_with_tools_2 = llm.bind_tools(tools_2)
tool_node_2 = ToolNode(tools_2)
```

@tool:

- The `cancel_order` function is now a tool the LLM can invoke if it decides that an order cancellation is needed. Its docstring serves as the tool description the LLM sees.

Extracting order_id:

- We call `llm.with_structured_output(method='json_mode')` to instruct the LLM to return JSON, then read the `'order_id'` key with `.get()`, so a missing key reaches the error branch instead of raising a `KeyError`.

LLM Initialization:

- `model="gpt-4o-mini"` is the chosen model, with `temperature=0` for consistent, near-deterministic responses.

Binding and ToolNode:

- `llm.bind_tools(tools_2)` connects our LLM with the `cancel_order` tool.

- `ToolNode` is a specialized node that executes the tool calls the LLM requests.
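Because `cancel_order` relies entirely on the LLM to find the ID, a deterministic pattern match can serve as a cross-check or fallback for simple phrasings such as the test query used later. This is a hypothetical addition, not part of the tutorial: the regular expression assumes IDs appear after the words “order_id” or “order id” and consist of letters, digits, underscores, or hyphens.

```python
import re
from typing import Optional

# Hypothetical pattern: "order_id 223", "Order ID: A-17", "orderid #5", ...
ORDER_ID_PATTERN = re.compile(r"order[_\s]?id\b\s*[:#]?\s*([A-Za-z0-9_-]+)", re.IGNORECASE)


def extract_order_id(text: str) -> Optional[str]:
    """Return the first order ID found after an 'order id' marker, or None."""
    match = ORDER_ID_PATTERN.search(text)
    return match.group(1) if match else None


print(extract_order_id("I wish to cancel order_id 223"))  # 223
```

You could compare this with the LLM’s answer and treat a mismatch as a reason to ask the user for confirmation.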

Defining Workflow Nodes

We’ll now start defining nodes one by one.

Model Call Nodes

These functions handle the LLM call and the routing that follows it:

```python
def call_model_2(state: MessagesState):
    """Use the LLM to decide the next step."""
    messages = state["messages"]
    # Pass the message list itself (not its string form) so the model sees
    # the user turn, its own earlier tool calls, and the tool results
    response = llm_with_tools_2.invoke(messages)
    return {"messages": [response]}


def call_tools_2(state: MessagesState) -> Literal["tools_2", END]:
    """Route workflow based on tool calls."""
    messages = state["messages"]
    last_message = messages[-1]
    # If the LLM requested a tool, run it; otherwise finish
    if last_message.tool_calls:
        return "tools_2"
    return END
```

- `call_model_2`: Takes the conversation (`messages`) and passes it to the LLM with the bound tools. If the LLM triggers a tool call, `call_tools_2` detects it.

- `call_tools_2`: Checks whether the LLM requested a tool call (`tool_calls`). If so, we route to `"tools_2"`, which is the `ToolNode`; otherwise, the workflow ends.

Categorize Query

Here, we define a node for categorizing the query:

```python
def categorize_query(state: MessagesState) -> MessagesState:
    """Categorize user query into PlaceOrder or CancelOrder"""
    prompt = ChatPromptTemplate.from_template(
        # Adjacent string literals are joined as-is, so keep the separating space
        "Categorize the user query into PlaceOrder or CancelOrder. "
        "Respond with either 'PlaceOrder' or 'CancelOrder'. Query: {state}"
    )
    chain = prompt | llm  # reuse the gpt-4o-mini client (temperature=0) defined earlier
    category = chain.invoke({"state": state}).content.strip()
    return {"query": state, "category": category}
```

- This node uses the LLM to classify the user’s query. The return value sets `"category"`, which the routing function uses to pick the branch.

Check Inventory

```python
def check_inventory(state: MessagesState) -> MessagesState:
    """Check if the requested item is in stock."""
    extractor = llm.with_structured_output(method="json_mode")
    # .get() returns None instead of raising KeyError if the LLM omits a field
    item_id = extractor.invoke(
        f"Extract item_id from the following text in json format: {state}"
    ).get("item_id")
    quantity = extractor.invoke(
        f"Extract quantity from the following text in json format: {state}"
    ).get("quantity")
    if not item_id or not quantity:
        return {"error": "Missing 'item_id' or 'quantity'."}
    # The LLM may return quantity as a string (e.g. "4"), so cast before comparing
    if inventory.get(item_id, {}).get("stock", 0) >= int(quantity):
        print("IN STOCK")
        return {"order_status": "In Stock"}
    return {"query": state, "order_status": "Out of Stock"}
```

- Asks the LLM to extract `item_id` and `quantity` from the conversation.

- Checks `inventory[item_id]["stock"]` to confirm availability.
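As written, the graph built later always moves from `CheckInventory` to `ComputeShipping`, even when this node reports “Out of Stock”. One possible extension (not part of the tutorial) is a second routing function, wired with `add_conditional_edges` in place of the plain `CheckInventory` to `ComputeShipping` edge, that ends the run early. The sketch assumes `order_status` and `error` are visible to the router, which requires them to be part of the graph’s state; `END` is redefined locally as `"__end__"`, the value of LangGraph’s `END` constant, only so the snippet runs without LangGraph installed.

```python
from typing import Mapping

# Stand-in for langgraph.graph.END so this sketch runs on its own
END = "__end__"


def route_after_inventory(state: Mapping[str, object]) -> str:
    """Hypothetical router: continue to shipping only if the stock check passed."""
    if "error" in state or state.get("order_status") == "Out of Stock":
        return END
    return "ComputeShipping"
```

Wired in, its mapping would send `"ComputeShipping"` to that node and `END` to the end of the run, mirroring how `route_query_1` is mapped for `RouteQuery`.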

Compute Shipping

Next, we define a node that calculates the shipping cost for the specific customer:

```python
def compute_shipping(state: MessagesState) -> MessagesState:
    """Calculate shipping costs."""
    extractor = llm.with_structured_output(method="json_mode")
    item_id = extractor.invoke(
        f"Extract item_id from the following text in json format: {state}"
    ).get("item_id")
    quantity = extractor.invoke(
        f"Extract quantity from the following text in json format: {state}"
    ).get("quantity")
    customer_id = extractor.invoke(
        f"Extract customer_id from the following text in json format: {state}"
    ).get("customer_id")
    # Look up the location safely: a missing or unknown customer_id yields None
    location = customers.get(customer_id, {}).get("location")
    if not item_id or not quantity or not location:
        return {"error": "Missing 'item_id', 'quantity', or 'location'."}
    weight_per_item = inventory[item_id]["weight"]
    total_weight = weight_per_item * int(quantity)
    # Rate per unit of weight; unknown locations fall back to 10
    rates = {"local": 5, "domestic": 10, "international": 20}
    cost = total_weight * rates.get(location, 10)
    print(cost, location)
    return {"query": state, "cost": f"${cost:.2f}"}
```

- Extracts the `customer_id` from the user’s query, then looks up the customer’s location in the `customers` dictionary.

- Calculates the shipping cost from the item’s weight, the quantity, and the customer’s location.
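The shipping formula is total weight times a per-location rate. As a worked example, assume (hypothetically) that item_51 weighs 1.5 units; the real value comes from `inventory.csv`. Ordering 4 of them for a domestic customer gives 1.5 × 4 = 6 units of weight and 6 × 10 = 60, formatted as `$60.00`. The hypothetical `shipping_cost` function below reproduces that arithmetic in isolation.

```python
# Same rates as compute_shipping; unknown locations fall back to 10
SHIPPING_RATES = {"local": 5, "domestic": 10, "international": 20}


def shipping_cost(weight_per_item: float, quantity: int, location: str) -> str:
    """Total weight times the per-location rate, formatted like the tutorial's node."""
    total_weight = weight_per_item * quantity
    cost = total_weight * SHIPPING_RATES.get(location, 10)
    return f"${cost:.2f}"


print(shipping_cost(1.5, 4, "domestic"))  # $60.00
```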

Process Payment

We’ll define a node for processing payment:

```python
def process_payment(state: State) -> State:
    """Simulate payment processing."""
    # Read just the cost value from the LLM's JSON (None if it isn't found)
    cost = llm.with_structured_output(method="json_mode").invoke(
        f"Extract cost from the following text in json format: {state}"
    ).get("cost")
    if not cost:
        return {"error": "Missing 'cost'."}
    # Simulate the payment gateway's response, then report it
    payment_outcome = random.choice(["Success", "Failed"])
    if payment_outcome == "Success":
        print(f"PAYMENT PROCESSED: {cost} and order successfully placed!")
    else:
        print(f"PAYMENT FAILED: {cost}")
    return {"payment_status": payment_outcome}
```

- Uses `random.choice` to simulate success or failure.

- In a production system, you’d integrate with a real payment gateway.
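Because `process_payment` draws from the module-level `random.choice`, each run can differ, which makes tests flaky. One option, sketched below with a hypothetical `simulate_payment` helper, is to pass in a seeded `random.Random` instance: the same seed always yields the same sequence of outcomes.

```python
import random

OUTCOMES = ("Success", "Failed")


def simulate_payment(rng: random.Random) -> str:
    """Pick a payment outcome using the supplied generator instead of the global one."""
    return rng.choice(OUTCOMES)


# Two generators with the same seed produce identical outcome sequences
rng_a = random.Random(7)
rng_b = random.Random(7)
run_a = [simulate_payment(rng_a) for _ in range(5)]
run_b = [simulate_payment(rng_b) for _ in range(5)]
print(run_a == run_b)  # True
```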

Routing Function

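We now define the routing function used by the conditional edge after `RouteQuery`:

```python
def route_query_1(state: State) -> str:
    """Route the query based on its category."""
    print(state)  # debug: inspect what the router receives
    if state["category"] == "PlaceOrder":
        return "PlaceOrder"
    elif state["category"] == "CancelOrder":
        return "CancelOrder"
    # Fail loudly instead of returning None for an unexpected label
    raise ValueError(f"Unexpected category: {state['category']!r}")
```

- Decides which path to follow next: “PlaceOrder” or “CancelOrder.” In LangGraph, we’ll map “PlaceOrder” to the `CheckInventory` node and “CancelOrder” to the `CancelOrder` node. Any other value is treated as an error.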
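`route_query_1` compares `category` against the exact strings “PlaceOrder” and “CancelOrder”, so a model reply like `'CancelOrder'.` (with quotes or a trailing period) would match neither branch. A small normalizer, sketched below as a hypothetical helper, can map such variants onto the exact labels before routing; it simply drops non-alphanumeric characters and compares case-insensitively.

```python
from typing import Optional

# Hypothetical: the two labels route_query_1 expects
VALID_CATEGORIES = ("PlaceOrder", "CancelOrder")


def normalize_category(raw: str) -> Optional[str]:
    """Map loose LLM output (quotes, punctuation, spacing, case) to an exact label, or None."""
    cleaned = "".join(ch for ch in raw if ch.isalnum()).lower()
    for label in VALID_CATEGORIES:
        if cleaned == label.lower():
            return label
    return None


print(normalize_category("'CancelOrder'."))  # CancelOrder
```

You could apply it to `category` inside `categorize_query` before returning and treat `None` as an error.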

Building the Workflow Graph

Below, we create a StateGraph, add nodes, and define edges and conditional edges.

```python
# Create the workflow
workflow = StateGraph(MessagesState)

# Add nodes
workflow.add_node("RouteQuery", categorize_query)
workflow.add_node("CheckInventory", check_inventory)
workflow.add_node("ComputeShipping", compute_shipping)
workflow.add_node("ProcessPayment", process_payment)
workflow.add_node("CancelOrder", call_model_2)
workflow.add_node("tools_2", tool_node_2)

# Branch on the category returned by RouteQuery
workflow.add_conditional_edges(
    "RouteQuery",
    route_query_1,
    {
        "PlaceOrder": "CheckInventory",
        "CancelOrder": "CancelOrder",
    },
)

# Define edges
workflow.add_edge(START, "RouteQuery")
workflow.add_edge("CheckInventory", "ComputeShipping")
workflow.add_edge("ComputeShipping", "ProcessPayment")
workflow.add_conditional_edges("CancelOrder", call_tools_2)
workflow.add_edge("tools_2", "CancelOrder")
workflow.add_edge("ProcessPayment", END)
```

StateGraph(MessagesState):

- We specify `MessagesState` to hold conversation data. Its schema contains a single `messages` key, so the nodes above re-extract `item_id`, `quantity`, and `customer_id` from the conversation with the LLM rather than reading them from typed `State` fields.

Nodes:

- `RouteQuery` is the entry node that categorizes the user’s intent.

- “CheckInventory,” “ComputeShipping,” and “ProcessPayment” handle the PlaceOrder flow.

- “CancelOrder” and “tools_2” handle the CancelOrder flow.

Conditional Edges:

- The call to `workflow.add_conditional_edges("RouteQuery", route_query_1, ...)` sends the flow to `CheckInventory` for a “PlaceOrder” query or to `CancelOrder` for a “CancelOrder” query.

Looping:

- In the CancelOrder branch, `call_tools_2` checks whether the LLM triggered a tool call. If it did, we go to `tools_2` (the `ToolNode`); after the tool runs, control returns to “CancelOrder,” giving the LLM a chance to take further actions or end.

Finish:

- “ProcessPayment” leads to `END`, concluding the “PlaceOrder” path.
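The loop between `CancelOrder` and `tools_2` can be simulated without an API key using stub objects. In this hypothetical sketch, `FakeMessage` stands in for LangChain’s message classes, `stub_model` plays the LLM (it requests the cancel tool once, then answers), and `max_steps` guards against a model that never stops calling tools; in LangGraph, the analogous safeguard is the `recursion_limit` setting in the run config.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class FakeMessage:
    """Minimal stand-in for an LLM message; only the fields the loop needs."""
    content: str
    tool_calls: List[str] = field(default_factory=list)


def run_tool_loop(
    model: Callable[[List[FakeMessage]], FakeMessage],
    run_tool: Callable[[str], FakeMessage],
    messages: List[FakeMessage],
    max_steps: int = 10,
) -> List[FakeMessage]:
    """Alternate model -> tools -> model until the model stops requesting tools."""
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        if not reply.tool_calls:  # same test as call_tools_2
            return messages
        for call in reply.tool_calls:
            messages.append(run_tool(call))
    raise RuntimeError("Too many model/tool round-trips")


def stub_model(messages: List[FakeMessage]) -> FakeMessage:
    # First turn: request the cancel tool; once a tool result arrives: answer.
    if messages[-1].content.startswith("TOOL:"):
        return FakeMessage("Your order 223 has been cancelled.")
    return FakeMessage("", tool_calls=["cancel_order"])


def stub_tool(name: str) -> FakeMessage:
    return FakeMessage(f"TOOL:{name} -> Order stands cancelled")


history = run_tool_loop(stub_model, stub_tool, [FakeMessage("I wish to cancel order_id 223")])
print([m.content for m in history])
```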

Visualizing and Testing the Workflow

The next snippet compiles the workflow into an agent, renders it as a Mermaid diagram, and tests it with sample queries.

# Compile the workflow

agent = workflow.compile()

# Visualize the workflow

mermaid_graph = agent.get_graph()

mermaid_png = mermaid_graph.draw_mermaid_png(draw_method=MermaidDrawMethod.API)

display(Image(mermaid_png))

# Query the workflow

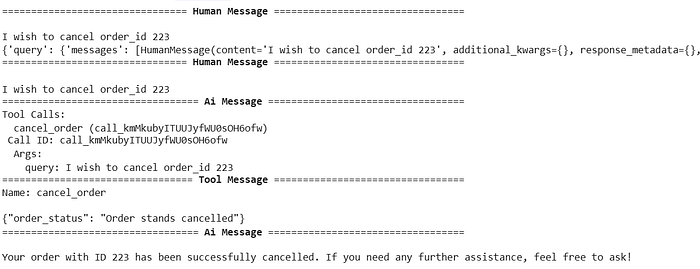

user_query = "I wish to cancel order_id 223"

for chunk in agent.stream(

{"messages": [("user", user_query)]},

stream_mode="values",

):

chunk["messages"][-1].pretty_print()

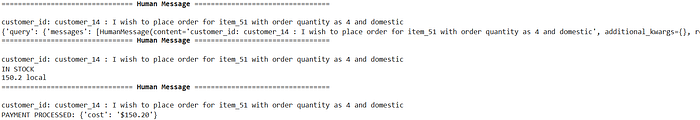

auser_query = "customer_id: customer_14 : I wish to place order for item_51 with order quantity as 4 and domestic"

for chunk in agent.stream(

{"messages": [("user", auser_query)]},

stream_mode="values",

):

chunk["messages"][-1].pretty_print()Compile:

agent = workflow.compile()transforms our node/edge definitions into an executable agent.

Visualize:

- We get a Mermaid diagram (

mermaid_png) which we can display in a Jupyter notebook for debugging or demonstrations.

Test Queries:

- First test: “I wish to cancel order_id 223” should route to

CancelOrder.

- Second test: “customer_id: customer_14 : I wish to place order for item_51…” should route to the place-order workflow.

Conclusion

By leveraging LangGraph, we’ve built a dynamic, branching workflow that either places or cancels orders based on user intent. We demonstrated:

- How to categorize queries with an LLM node (`categorize_query`).

- How to bind a tool (`cancel_order`) and integrate it into the workflow.

- How to check inventory, compute shipping, and process payment via separate nodes.

- How to visualize the entire workflow with Mermaid.js.

This approach is scalable: you can add more steps (e.g., address verification, promotional codes) or additional branches (e.g., update an existing order) without rewriting a monolithic script. If you need loops for retrying failed payments or verifying user confirmations, LangGraph can handle that as well.